Key technologies:

Front end: React or Flutter, and JavaScript-based visualisation tools such as d3.js and Observable.

Back end: vector database (Chroma).

Machine learning: prompt engineering, agent orchestration frameworks (such as LangChain/LangGraph), and MCP (Model Context Protocol).

Background

AI has been behind some recent scientific breakthroughs. One of the most prominent examples is the 2024 Nobel Prize-winning work on using machine learning to predict the 3D structure of proteins, a problem that is fundamental to biology and medicine and had remained unsolved for decades.

AI has also been used to speed up scientific discovery by automating parts of the process. An extreme example is the AI Scientist by sakana.ai, which, given some initial input, can generate ideas, write the necessary code, design and run evaluations, and finally write up the results as a scientific paper. Below is what the paper looks like.

Project Idea

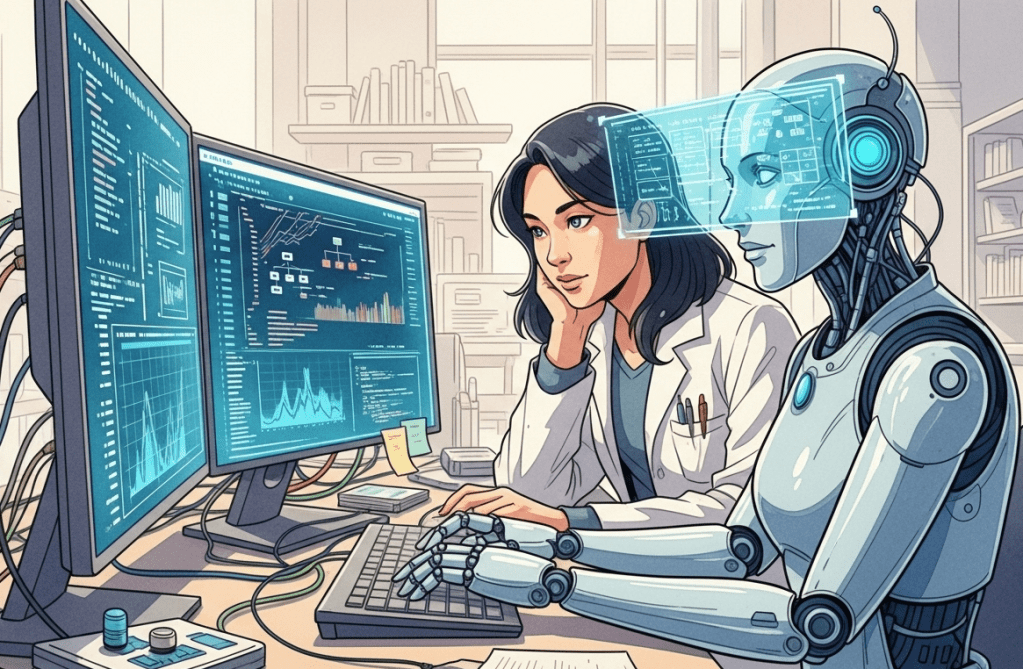

I think the sweet spot is somewhere in the middle, where humans stay in control and AI does the time-consuming parts, dramatically improving both the quality of discovery (as with the Nobel Prize work) and its speed (through automation). Different stages of scientific research will need different kinds of AI support, from literature research and hypothesis generation to implementation and testing, and finally even writing up. The pipeline can also be quite different across disciplines, from computer science and materials engineering to social science.

Idea 1: Literature Research

Literature research is usually the first stage of any scientific research. It is used to understand the state-of-the-art solutions (the starting points) and the latest developments in the relevant fields (inspiration for new solutions).

There are guidelines, such as PRISMA, that help with writing a good literature review. However, these mainly cover ‘what’ should be included, not ‘how’ to produce it (e.g., how to collect and analyse papers). On their own, they are not sufficient for an LLM to help conduct a literature review.
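As a rough illustration of the ‘how’ that an LLM-assisted pipeline would have to spell out, the sketch below deduplicates retrieved records and applies an inclusion criterion while keeping a simplified version of the stage counts reported in a PRISMA flow diagram. The `Record` fields, the duplicate-matching rule and the keyword criterion are all assumptions for illustration; in practice the criterion might be an LLM call or a human decision.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Record:
    # Hypothetical minimal representation of a retrieved paper.
    title: str
    doi: Optional[str]
    abstract: str


def normalise_title(title: str) -> str:
    # Keep only lower-cased letters and digits so trivially different titles match.
    return "".join(ch for ch in title.lower() if ch.isalnum())


def deduplicate(records: List[Record]) -> List[Record]:
    # A record counts as a duplicate if its DOI or its normalised title was seen before.
    seen = set()
    unique = []
    for rec in records:
        keys = {"title:" + normalise_title(rec.title)}
        if rec.doi:
            keys.add("doi:" + rec.doi.lower())
        if keys & seen:
            continue
        seen |= keys
        unique.append(rec)
    return unique


def screen(records: List[Record],
           include: Callable[[Record], bool]) -> Tuple[List[Record], Dict[str, int]]:
    # Simplified PRISMA-style counts for each stage of the pipeline.
    counts = {"identified": len(records)}
    unique = deduplicate(records)
    counts["duplicates_removed"] = len(records) - len(unique)
    counts["screened"] = len(unique)
    included = [rec for rec in unique if include(rec)]
    counts["excluded"] = len(unique) - len(included)
    counts["included"] = len(included)
    return included, counts


# Fictional records; the keyword test stands in for an LLM or human screening decision.
records = [
    Record("LSTM-based anomaly detection", "10.1000/abc", "Anomaly detection in time series with LSTMs."),
    Record("LSTM-Based Anomaly Detection.", None, "Same paper exported from another database."),
    Record("A survey of image segmentation", "10.1000/xyz", "Semantic segmentation methods."),
]
included, counts = screen(records, lambda rec: "anomaly" in rec.abstract.lower())
print(counts)
```

Keeping the counts alongside the filtered list means the numbers a review has to report fall out of the pipeline itself rather than being reconstructed afterwards.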

An LLM would need more detailed instructions than ‘write a literature review on topic XXX’, such as what the different sections of a literature review are, how to collect and analyse the required information, and finally how to write each section and connect the sections together.

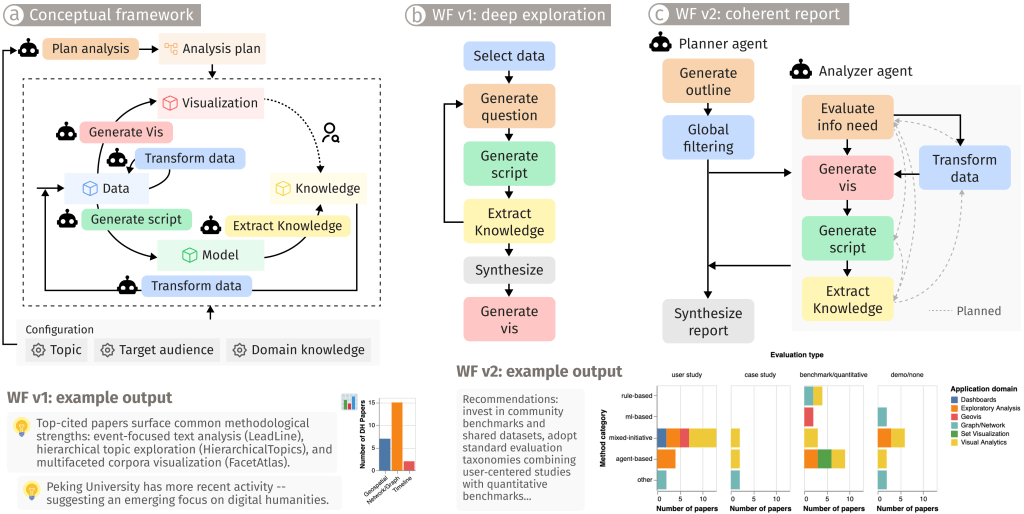

This usually requires a sophisticated agentic workflow, similar to the one used in the AI Scientist by sakana.ai mentioned earlier. This is where agent orchestration frameworks (such as LangChain/LangGraph) and MCP (Model Context Protocol) come into play. The former allows the construction of complex workflows with multiple collaborating agents, and the latter allows agents to access different resources to find relevant information and to select the right tool for each task. This relates to the recent Agentic Visualisation Challenge. Below is the workflow used in our entry.
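The orchestration idea can be sketched without any framework: agents are nodes that read and update a shared state, and edges (fixed or conditional) decide which agent runs next. This is loosely modelled on the state-graph style of LangGraph but deliberately does not use its API. The node names, the section outline and the stopping rule are hypothetical, and each node is a stub where an LLM call or an MCP tool call would go.

```python
from typing import Any, Callable, Dict, List, Tuple, Union

State = Dict[str, Any]
Node = Callable[[State], State]
Edge = Union[str, Callable[[State], str]]

SECTIONS = ["Introduction", "Methods", "Findings", "Open problems"]  # hypothetical outline


def search(state: State) -> State:
    # Stand-in for a search agent that would query paper sources (e.g. through MCP tools).
    found = [f"paper-{state['round']}-{i}" for i in range(2)]
    return {**state, "papers": state["papers"] + found, "round": state["round"] + 1}


def analyse(state: State) -> State:
    # Stand-in for an analysis agent that would summarise each paper with an LLM.
    return {**state, "notes": {p: f"summary of {p}" for p in state["papers"]}}


def write(state: State) -> State:
    # Stand-in for a writing agent: one draft per section, all built from the same notes.
    n = len(state["notes"])
    return {**state, "draft": {s: f"{s}: draws on {n} papers" for s in SECTIONS}}


def after_search(state: State) -> str:
    # Conditional edge: search again until enough papers are found or the round limit is hit.
    if len(state["papers"]) < state["min_papers"] and state["round"] < state["max_rounds"]:
        return "search"
    return "analyse"


def run_graph(state: State, nodes: Dict[str, Node], edges: Dict[str, Edge],
              start: str) -> Tuple[State, List[str]]:
    # Execute nodes one at a time, following fixed or conditional edges until "END".
    trace: List[str] = []
    current = start
    while current != "END":
        trace.append(current)
        state = nodes[current](state)
        edge = edges[current]
        current = edge(state) if callable(edge) else edge
    return state, trace


nodes = {"search": search, "analyse": analyse, "write": write}
edges = {"search": after_search, "analyse": "write", "write": "END"}
initial = {"papers": [], "round": 0, "min_papers": 5, "max_rounds": 4}
final, trace = run_graph(initial, nodes, edges, "search")
print(trace)  # ['search', 'search', 'search', 'analyse', 'write']
```

The conditional edge is what makes this a workflow rather than a fixed chain: the search agent is revisited until the state satisfies the stopping rule, and the round limit keeps it from running forever.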

Related to this, a user interface is needed to let users control the different parts of this agentic workflow. Otherwise, agents can easily get stuck, repeating the same attempts without making any progress.
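One simple way to keep an agent from looping is to count repeated actions and pause for the user once a threshold is reached. This is an illustration under stated assumptions, not a description of any existing tool: `propose`, `is_done` and `ask_human` are hypothetical callbacks (an LLM agent, a completion check and a UI prompt, respectively), and treating an identical action string as ‘the same attempt’ is a deliberate simplification.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple


def run_with_checkpoint(propose: Callable[[List[str]], str],
                        is_done: Callable[[str], bool],
                        ask_human: Callable[[List[str]], Optional[str]],
                        max_repeats: int = 2,
                        max_steps: int = 10) -> Tuple[List[str], bool]:
    """Run an agent loop, handing control to a person when the agent repeats itself."""
    history: List[str] = []
    attempts: Counter = Counter()
    for _ in range(max_steps):
        action = propose(history)
        attempts[action] += 1
        if attempts[action] > max_repeats:
            # The agent seems stuck: show the history to the user and ask for a new direction.
            hint = ask_human(history)
            if hint is None:
                return history, False  # the user chose to stop
            action = hint
        history.append(action)
        if is_done(action):
            return history, True
    return history, False


# Example with stubs: the "agent" keeps proposing the same query and the "user" refines it.
history, done = run_with_checkpoint(
    propose=lambda hist: "search: anomaly detection",
    is_done=lambda action: "survey" in action,
    ask_human=lambda hist: "search: time-series anomaly detection survey",
)
print(done, history)
```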

This relates to the Vitality 2 project, so it is worth having a look at that as well.

consensus.app seems to be making good progress on something related. This is a report it generated on the topic of ‘time-series anomaly detection’, and it is getting close to a literature review.

Idea 2: Qualitative Research

Qualitative research mostly deals with unstructured data (such as text), and the analysis usually does not involve numerical computation (which is the domain of quantitative analysis). It is used in many disciplines such as Human-Computer Interaction (HCI), Health, Psychology, Sociology, Law, and Business. For example, in a study of the general public's attitude towards LLMs, researchers may interview many participants and build up a large collection of interview transcripts. The researchers then need to extract information such as the user type, the application domain, and the user's attitude.

Currently, such information has to be extracted manually, often following a ‘coding scheme’ that defines the types of user, application, and attitude. For example, the ‘user attitude’ can be ‘positive’, ‘negative’, or ‘neutral’ (the ‘coding scheme’), and someone needs to read the transcript and decide this for each participant. It can take several hours to ‘code’ a one-hour interview transcript. This makes such research very time-consuming (weeks or months just to code the transcripts) and significantly limits the power of the analysis (a larger number of interviews allows the discovery of patterns that are more general and makes it less likely to miss rare but important cases).
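To make the idea of a coding scheme concrete, here is a minimal sketch of one as a data structure, with a check that a participant's codes conform to it and a tally across participants. Only the ‘attitude’ codes come from the example above; the ‘user_type’ and ‘application_domain’ codes, the participant IDs and the coded data are invented for illustration.

```python
from collections import Counter
from typing import Dict, List, Set

# Hypothetical coding scheme: only the 'attitude' codes come from the example above;
# the 'user_type' and 'application_domain' codes are made up for illustration.
CODING_SCHEME: Dict[str, Set[str]] = {
    "user_type": {"student", "professional", "researcher"},
    "application_domain": {"education", "health", "software"},
    "attitude": {"positive", "negative", "neutral"},
}


def validate(codes: Dict[str, str], scheme: Dict[str, Set[str]]) -> List[str]:
    # Report missing dimensions, codes outside the scheme, and unknown dimensions.
    problems = []
    for dimension, allowed in scheme.items():
        value = codes.get(dimension)
        if value is None:
            problems.append(f"missing {dimension}")
        elif value not in allowed:
            problems.append(f"{dimension}={value!r} is not in the scheme")
    for dimension in sorted(codes.keys() - scheme.keys()):
        problems.append(f"unknown dimension {dimension}")
    return problems


def tally(coded: Dict[str, Dict[str, str]], dimension: str) -> Counter:
    # Count how often each code appears for one dimension across participants.
    return Counter(codes[dimension] for codes in coded.values() if dimension in codes)


# Fictional coded transcripts, one entry per participant.
coded = {
    "P01": {"user_type": "student", "application_domain": "education", "attitude": "positive"},
    "P02": {"user_type": "professional", "application_domain": "software", "attitude": "neutral"},
    "P03": {"user_type": "researcher", "application_domain": "health", "attitude": "positive"},
}
print(tally(coded, "attitude"))
print(validate({"user_type": "student", "attitude": "sceptical"}, CODING_SCHEME))
```

Once codes are structured like this, counting patterns across hundreds of interviews is trivial; the expensive part is producing the codes, which is where an LLM could help.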

The project idea is to support such analysis with LLMs:

- Can we give an LLM the coding scheme and the transcript and ask it to do the ‘coding’?

- How can we help the LLM better understand the user’s analysis goal, i.e., what information the user wants to extract?

- How can we make this process easier for users who are not familiar with LLMs? A psychologist probably can’t write code to use an LLM, and commercial web interfaces, such as ChatGPT, are not ideal for data analysis.
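For the first two questions, one possible starting point is a prompt that states both the analysis goal and the coding scheme explicitly, combined with a check that rejects codes outside the scheme. In this sketch, `llm` is any function that takes a prompt string and returns the model's reply; no particular provider or client library is assumed, and `fake_llm` is a stub so the example runs offline. The prompt wording and the JSON reply format are assumptions, not a tested recipe.

```python
import json
from typing import Callable, Dict, List

# Hypothetical one-dimension scheme; the codes match the attitude example above.
SCHEME: Dict[str, List[str]] = {"attitude": ["positive", "negative", "neutral"]}


def build_prompt(scheme: Dict[str, List[str]], goal: str, transcript: str) -> str:
    # Spell out the analysis goal and the allowed codes instead of a one-line request.
    lines = [
        "You are helping with qualitative coding of an interview transcript.",
        f"Analysis goal: {goal}",
        "Coding scheme (choose exactly one code per dimension):",
    ]
    lines += [f"- {dim}: {', '.join(codes)}" for dim, codes in scheme.items()]
    lines += [
        'Reply only with JSON of the form {"<dimension>": {"code": "...", "evidence": "<quote>"}}.',
        "Transcript:",
        transcript,
    ]
    return "\n".join(lines)


def code_transcript(llm: Callable[[str], str], scheme: Dict[str, List[str]],
                    goal: str, transcript: str) -> Dict[str, dict]:
    # Ask the model for a first draft and flag anything that needs a human decision.
    reply = llm(build_prompt(scheme, goal, transcript))
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    draft = {}
    for dim, allowed in scheme.items():
        entry = data.get(dim)
        entry = entry if isinstance(entry, dict) else {}
        code = entry.get("code")
        ok = code in allowed
        draft[dim] = {"code": code if ok else None,
                      "evidence": entry.get("evidence", ""),
                      "needs_review": not ok}
    return draft


def fake_llm(prompt: str) -> str:
    # Stub standing in for a real model call; always returns the same answer.
    return json.dumps({"attitude": {"code": "positive", "evidence": "It saves me hours every week."}})


draft = code_transcript(fake_llm, SCHEME,
                        goal="Understand how participants feel about using LLMs at work.",
                        transcript="Interviewer: How do you find it? P01: It saves me hours every week.")
print(draft)
```

Asking for a supporting quote alongside each code is meant to make the draft easier to check: a reviewer can see why a code was chosen without rereading the whole transcript.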

The goal is not to fully automate the analysis, as LLMs are still not as good as (domain) experts at many tasks. Instead, the approach is for the LLM to produce a first-draft result, which the users/experts then refine to get the final result. This can hopefully reduce the overall effort to a half, a quarter, or even a tenth of what manual analysis requires. This is the Human-AI Collaboration method.
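Cohen's kappa is a standard measure of agreement between two coders in qualitative research. As one possible way (an assumption, not something this project prescribes) to quantify how much refinement the expert had to do, the sketch compares the LLM's first-draft codes with the expert's final codes and reports both the number of edited codes and kappa, κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each coder's code frequencies. The data are fictional.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    # Agreement between two coders, corrected for agreement expected by chance.
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical code throughout: agreement is trivially perfect
    return (observed - expected) / (1 - expected)


# Fictional example: LLM first draft vs. the expert's final codes for six participants.
llm_draft = ["positive", "negative", "neutral", "positive", "positive", "negative"]
expert_final = ["positive", "negative", "positive", "positive", "neutral", "negative"]

edited = sum(x != y for x, y in zip(llm_draft, expert_final))
kappa = cohens_kappa(llm_draft, expert_final)
print(f"expert changed {edited} of {len(llm_draft)} codes; kappa = {kappa:.3f}")
```

Tracking the edit count over time gives a direct view of how much manual work the draft saves, while kappa guards against a draft that only looks good because most participants share the same code.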

This will be a collaboration with the Visualisation Centre at the University of Stuttgart. One of the tools they developed could potentially be used to support such work.

- This is the paper describing the tool, and there is also a video below (trying to fix the link at the moment). The example used in the paper is categorising games: here the ‘game types/genres’ are the ‘coding scheme’, and deciding which type(s)/genre(s) a game belongs to is the ‘coding’ process.

- Currently it does not use LLMs, and one of the main goals of this project is to integrate LLMs into this tool. The source code is available here.

- Some related work and notes (in a Google Doc).

Idea 3: Quantitative Research

Quantitative research mainly involves numerical computation and is common in disciplines such as Computer Science, Engineering, Math, and Physics. It is possible to use Generative AI to support such research as well, but the support will be quite different because the nature of the research differs from that of qualitative research. There is no concrete idea yet, partly because there is no user partner yet. More information will be added as the project progresses.

Resources

- Short course on Agentic AI (by Andrew Ng, first available Oct 2025)
